1.5 Professional English for Information Security

1.5.1 Cryptography

1.Reading Materials

Cryptography [1] is the practice and study of hiding information. In modern times, cryptography is considered a branch of both mathematics and computer science, and is closely affiliated with information theory, computer security, and engineering. Cryptography is used in applications throughout technologically advanced societies; examples include the security of ATM cards, computer passwords, and electronic commerce, all of which depend on cryptography.

(1)Terminology

Until modern times, cryptography referred almost exclusively to encryption, the process of converting ordinary information (plaintext) into unintelligible gibberish (i.e., ciphertext). [2] Decryption is the reverse, moving from unintelligible ciphertext back to plaintext. A cipher (or cypher) is a pair of algorithms that perform the encryption and the reversing decryption. The detailed operation of a cipher is controlled both by the algorithm and, in each instance, by a key. The key is a secret parameter (ideally known only to the communicants) for a specific message exchange context. Keys are important, as ciphers without variable keys are trivially breakable and therefore less than useful for most purposes. Historically, ciphers were often used directly for encryption or decryption, without additional procedures such as authentication or integrity checks.
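As a minimal sketch of the cipher-plus-key idea, the toy shift cipher below shows a pair of algorithms whose behaviour is controlled by a key. The shift cipher itself, the function names, and the key value 3 are assumptions chosen for illustration; with only 26 possible keys, a cipher like this is trivially breakable.

```python
import string

ALPHABET = string.ascii_lowercase


def shift_encrypt(plaintext: str, key: int) -> str:
    """Shift each lowercase letter forward by `key` positions; leave other characters alone."""
    out = []
    for ch in plaintext:
        if ch in ALPHABET:
            out.append(ALPHABET[(ALPHABET.index(ch) + key) % 26])
        else:
            out.append(ch)
    return "".join(out)


def shift_decrypt(ciphertext: str, key: int) -> str:
    """Decryption is the same algorithm run with the negated key."""
    return shift_encrypt(ciphertext, -key)


ciphertext = shift_encrypt("attack at dawn", 3)
recovered = shift_decrypt(ciphertext, 3)
print(ciphertext)  # dwwdfn dw gdzq
print(recovered)   # attack at dawn
```

The same two functions serve every pair of communicants; only the key changes from one message exchange to another.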

In colloquial use, the term "code" is often used to mean any method of encryption or concealment of meaning. In cryptography, however, code has a more specific meaning: the replacement of a unit of plaintext (i.e., a meaningful word or phrase) with a code word (for example, apple pie replaces attack at dawn). Codes are no longer used in serious cryptography, except incidentally for such things as unit designations (e.g., Bronco Flight or Operation Overlord), since properly chosen ciphers are both more practical and more secure than even the best codes, and better adapted to computers as well.

Some use the terms cryptography and cryptology interchangeably in English, while others use cryptography to refer specifically to the use and practice of cryptographic techniques, and cryptology to refer to the combined study of cryptography and cryptanalysis. [3] [4]

The study of characteristics of languages that have some application in cryptology (e.g., frequency data, letter combinations, and universal patterns) is called cryptolinguistics.
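Frequency data of this kind is easy to gather. The sketch below counts letter frequencies in a sample string; the function name and the sample text are assumptions made for the example.

```python
from collections import Counter


def letter_frequencies(text: str) -> list:
    """Return (letter, count) pairs, most frequent first, ignoring case and non-letters."""
    letters = [ch for ch in text.lower() if ch.isalpha()]
    return Counter(letters).most_common()


sample = "attack at dawn"
frequencies = letter_frequencies(sample)
print(frequencies[:2])  # [('a', 4), ('t', 3)]
```

Applied to a long ciphertext produced by a simple substitution cipher, the same count tends to reveal which ciphertext symbols stand for the most common letters of the language.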

(2)Modern cryptography

The modern field of cryptography can be divided into several areas of study. The chief ones are discussed here; see Topics in Cryptography for more.

(3)Symmetric-key cryptography

Symmetric-key cryptography refers to encryption methods in which both the sender and receiver share the same key (or, less commonly, in which their keys are different but related in an easily computable way). This was the only kind of encryption publicly known until June 1976. [8]

Figure: One round (out of 8.5) of the patented IDEA cipher, used in some versions of PGP for high-speed encryption of, for instance, e-mail.

The modern study of symmetric-key ciphers relates mainly to the study of block ciphers and stream ciphers and to their applications. A block cipher is, in a sense, a modern embodiment of Alberti’s polyalphabetic cipher: block ciphers take as input a block of plaintext and a key, and output a block of ciphertext of the same size. Since messages are almost always longer than a single block, some method of knitting together successive blocks is required. Several have been developed, some with better security in one aspect or another than others. These methods are the modes of operation, and they must be carefully considered when using a block cipher in a cryptosystem.
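The difference between modes can be sketched with a stand-in block cipher. In the example below, `toy_block_encrypt` (byte-wise addition of the key) is a deliberately insecure placeholder, and the 4-byte block size, key, and IV are all assumptions; only the chaining logic of the two well-known modes, ECB and CBC, is the point. The message length is assumed to be a multiple of the block size, so no padding is shown.

```python
BLOCK = 4  # toy block size in bytes (real ciphers use 8 or 16)


def toy_block_encrypt(block: bytes, key: bytes) -> bytes:
    """Placeholder for a real block cipher: byte-wise addition mod 256. NOT secure."""
    return bytes((b + k) % 256 for b, k in zip(block, key))


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def split_blocks(data: bytes) -> list:
    return [data[i:i + BLOCK] for i in range(0, len(data), BLOCK)]


def ecb_encrypt(plaintext: bytes, key: bytes) -> bytes:
    """ECB: every block is encrypted independently."""
    return b"".join(toy_block_encrypt(b, key) for b in split_blocks(plaintext))


def cbc_encrypt(plaintext: bytes, key: bytes, iv: bytes) -> bytes:
    """CBC: each plaintext block is XORed with the previous ciphertext block first."""
    out, previous = [], iv
    for block in split_blocks(plaintext):
        previous = toy_block_encrypt(xor_bytes(block, previous), key)
        out.append(previous)
    return b"".join(out)


key = b"\x13\x37\xc0\xde"
iv = b"\x01\x02\x03\x04"
message = b"ABCDABCD"  # two identical plaintext blocks

ecb = ecb_encrypt(message, key)
cbc = cbc_encrypt(message, key, iv)
print(ecb[:BLOCK] == ecb[BLOCK:])  # True: ECB reveals that the blocks repeat
print(cbc[:BLOCK] == cbc[BLOCK:])  # False: chaining hides the repetition
```

This is one concrete reason the choice of mode matters: the same block cipher and key can leak plaintext structure in one mode and conceal it in another.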

The Data Encryption Standard (DES) and the Advanced Encryption Standard (AES) are block cipher designs which have been designated cryptography standards by the US government (though DES’s designation was finally withdrawn after the AES was adopted). [10] Despite its deprecation as an official standard, DES (especially its still-approved and much more secure triple-DES variant) remains quite popular; it is used across a wide range of applications, from ATM encryption [11] to e-mail privacy [12] and secure remote access. [13] Many other block ciphers have been designed and released, with considerable variation in quality. Many have been thoroughly broken. See Category:Block ciphers. [9] [14]

Stream ciphers, in contrast to the ‘block’ type, create an arbitrarily long stream of key material, which is combined with the plaintext bit-by-bit or character-by-character, somewhat like the one-time pad. In a stream cipher, the output stream is created based on an internal state which changes as the cipher operates. That state change is controlled by the key and, in some stream ciphers, by the plaintext stream as well. RC4 is an example of a well-known and widely used stream cipher; see Category:Stream ciphers. [9]
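The internal-state idea can be seen in a compact sketch of RC4, shown here for illustration only: RC4 is no longer considered secure for new designs. The function name `rc4` and the sample key and message are assumptions made for the example.

```python
def rc4(key: bytes, data: bytes) -> bytes:
    """Encrypt or decrypt `data` with RC4; the two operations are identical."""
    # Key-scheduling: the key determines the initial permutation of the state.
    state = list(range(256))
    j = 0
    for i in range(256):
        j = (j + state[i] + key[i % len(key)]) % 256
        state[i], state[j] = state[j], state[i]

    # Keystream generation: the state changes with every output byte,
    # and each keystream byte is XORed with one byte of the input.
    out = bytearray()
    i = j = 0
    for byte in data:
        i = (i + 1) % 256
        j = (j + state[i]) % 256
        state[i], state[j] = state[j], state[i]
        out.append(byte ^ state[(state[i] + state[j]) % 256])
    return bytes(out)


ciphertext = rc4(b"Key", b"Plaintext")
recovered = rc4(b"Key", ciphertext)
print(ciphertext.hex())  # bbf316e8d940af0ad3
print(recovered)         # b'Plaintext'
```

Because encryption is a plain XOR with the keystream, reusing the same key for two messages exposes the XOR of their plaintexts, which is why stream cipher keys must not be reused.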

Cryptographic hash functions (often called message digest functions) do not necessarily use keys, but are a related and important class of cryptographic algorithms. They take input data (often an entire message) and output a short, fixed-length hash, and do so as a one-way function. For good ones, collisions (two plaintexts which produce the same hash) are extremely difficult to find.
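The fixed-length property is easy to observe with a standard library hash. The sketch below uses SHA-256 from Python's `hashlib`; the choice of SHA-256 and the sample inputs are assumptions made for the example.

```python
import hashlib

short_digest = hashlib.sha256(b"abc").hexdigest()
long_digest = hashlib.sha256(b"abc" * 100_000).hexdigest()
changed_digest = hashlib.sha256(b"abd").hexdigest()

# Both digests are 64 hex characters (256 bits), whatever the input length.
print(len(short_digest), len(long_digest))
# A one-character change in the input gives an unrelated-looking digest.
print(short_digest)
print(changed_digest)
```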

Message authentication codes (MACs) are much like cryptographic hash functions, except that a secret key is used to authenticate the hash value [9] on receipt. The key blocks an attack against plain hash functions: without it, anyone who alters a message can simply recompute a matching hash.
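A keyed check of this kind can be sketched with HMAC from Python's standard library. HMAC-SHA256 is one common MAC construction, chosen here as an assumption; the helper names, key, and messages are likewise illustrative.

```python
import hashlib
import hmac


def make_tag(key: bytes, message: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag over the message."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify_tag(key: bytes, message: bytes, tag: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(make_tag(key, message), tag)


shared_key = b"example shared secret"
message = b"transfer 100 to alice"
tag = make_tag(shared_key, message)

print(verify_tag(shared_key, message, tag))                   # True
print(verify_tag(shared_key, b"transfer 900 to eve", tag))    # False
print(verify_tag(b"attacker guess", message, tag))            # False
```

Only a party holding the shared key can produce a tag that verifies, which is what a plain hash cannot offer.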

(4)Public-key cryptography

Symmetric-key cryptosystems use the same key for encryption and decryption of a message, though a message or group of messages may have a different key than others. A significant disadvantage of symmetric ciphers is the key management necessary to use them securely. Each distinct pair of communicating parties must, ideally, share a different key, and perhaps each ciphertext exchanged as well. The number of keys required increases as the square of the number of network members, which very quickly requires complex key management schemes to keep them all straight and secret. The difficulty of securely establishing a secret key between two communicating parties when a secure channel doesn’t already exist between them also presents a chicken-and-egg problem, which is a considerable practical obstacle for cryptography users in the real world.
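The quadratic growth follows from counting pairs: with n members and one key per pair, n(n - 1)/2 keys are needed. A short sketch, with the function name as an assumption:

```python
def pairwise_keys(members: int) -> int:
    """Number of distinct keys when every pair of members shares its own key."""
    return members * (members - 1) // 2


for n in (10, 100, 1000):
    print(n, pairwise_keys(n))
# 10 45
# 100 4950
# 1000 499500
```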

Figure: Whitfield Diffie and Martin Hellman, authors of the first paper on public-key cryptography.

In a groundbreaking 1976 paper, Whitfield Diffie and Martin Hellman proposed the notion of public-key (also, more generally, called asymmetric key) cryptography, in which two different but mathematically related keys are used: a public key and a private key. [15] A public key system is so constructed that calculation of one key (the ‘private key’) is computationally infeasible from the other (the ‘public key’), even though they are necessarily related. Instead, both keys are generated secretly, as an interrelated pair. [16] The historian David Kahn described public-key cryptography as "the most revolutionary new concept in the field since polyalphabetic substitution emerged in the Renaissance". [17]

In public-key cryptosystems, the public key may be freely distributed, while its paired private key must remain secret. The public key is typically used for encryption, while the private or secret key is used for decryption. Diffie and Hellman showed that public-key cryptography was possible by presenting the Diffie-Hellman key exchange protocol. [8]
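The key exchange can be sketched with toy numbers. The prime 23, generator 5, and the two private values below are assumptions chosen so the arithmetic can be checked by hand; real deployments use parameters that are thousands of bits long.

```python
# Public parameters, known to everyone (toy sizes).
p, g = 23, 5

# Each party picks a private value and publishes g^private mod p.
alice_private = 4
bob_private = 3
alice_public = pow(g, alice_private, p)   # 5^4 mod 23 = 4
bob_public = pow(g, bob_private, p)       # 5^3 mod 23 = 10

# Each side combines its own private value with the other's public value.
alice_shared = pow(bob_public, alice_private, p)
bob_shared = pow(alice_public, bob_private, p)

print(alice_shared, bob_shared)  # 18 18
```

An eavesdropper sees only p, g, and the two public values; recovering the shared secret from them is the discrete logarithm-related problem on which the protocol's security rests.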

In 1978, Ronald Rivest, Adi Shamir, and Len Adleman invented RSA, another public-key system. [18]
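A toy run of RSA makes the public/private relationship concrete. The primes 61 and 53, the exponent 17, and the message 65 are assumptions picked for readability; keys this small offer no security, and real RSA also requires padding, which this sketch omits. The call `pow(e, -1, phi)` needs Python 3.8 or later.

```python
# Key generation with toy primes.
p, q = 61, 53
n = p * q                      # public modulus: 3233
phi = (p - 1) * (q - 1)        # 3120
e = 17                         # public exponent, coprime to phi
d = pow(e, -1, phi)            # private exponent: 2753

# Encryption uses the public key (n, e); decryption uses the private key d.
message = 65
ciphertext = pow(message, e, n)
recovered = pow(ciphertext, d, n)

print(d, ciphertext, recovered)  # 2753 2790 65
```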

In 1997, it finally became publicly known that asymmetric key cryptography had been invented by James H. Ellis at GCHQ, a British intelligence organization, and that, in the early 1970s, both the Diffie-Hellman and RSA algorithms had been previously developed (by Malcolm J. Williamson and Clifford Cocks, respectively). [19]

The Diffie-Hellman and RSA algorithms, in addition to being the first publicly known examples of high quality public-key algorithms, have been among the most widely used. Others include the Cramer-Shoup cryptosystem, ElGamal encryption, and various elliptic curve techniques. See Category:Asymmetric-key cryptosystems.

Figure: Padlock icon from the Firefox Web browser, meant to indicate that a page has been sent in SSL or TLS-encrypted protected form. However, such an icon is not a guarantee of security; any subverted browser might mislead a user by displaying such an icon when a transmission is not actually being protected by SSL or TLS.

In addition to encryption, public-key cryptography can be used to implement digital signature schemes. A digital signature is reminiscent of an ordinary signature; they both have the characteristic that they are easy for a user to produce, but difficult for anyone else to forge. Digital signatures can also be permanently tied to the content of the message being signed; they cannot then be ‘moved’ from one document to another, for any attempt will be detectable. In digital signature schemes, there are two algorithms: one for signing, in which a secret key is used to process the message (or a hash of the message, or both), and one for verification, in which the matching public key is used with the message to check the validity of the signature. RSA and DSA are two of the most popular digital signature schemes. Digital signatures are central to the operation of public key infrastructures and many network security schemes (e.g., SSL/TLS, many VPNs, etc.). [14]
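The two algorithms, signing and verification, can be sketched with a toy RSA key pair applied to a hash of the message. The modulus 3233 (61 × 53), the exponents, and the helper names are assumptions for illustration; reducing the hash modulo such a tiny modulus discards nearly all of its strength, so this shows the shape of the scheme only.

```python
import hashlib

# Toy RSA key pair: (N, E) is public, D is private.
N, E, D = 3233, 17, 2753


def digest_mod_n(message: bytes) -> int:
    """Hash the message and reduce it into the range of the toy modulus."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N


def sign(message: bytes) -> int:
    """Signing: process the message hash with the private key."""
    return pow(digest_mod_n(message), D, N)


def verify(message: bytes, signature: int) -> bool:
    """Verification: undo the signature with the public key and compare hashes."""
    return pow(signature, E, N) == digest_mod_n(message)


document = b"I owe Bob 10 dollars"
signature = sign(document)
print(verify(document, signature))             # True
print(verify(document, (signature + 1) % N))   # False: altered signature
```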

Public-key algorithms are most often based on the computational complexity of "hard" problems, often from number theory. For example, the hardness of RSA is related to the integer factorization problem, while Diffie-Hellman and DSA are related to the discrete logarithm problem. More recently, elliptic curve cryptography has developed, in which security is based on number theoretic problems involving elliptic curves. Because of the difficulty of the underlying problems, most public-key algorithms involve operations such as modular multiplication and exponentiation, which are much more computationally expensive than the techniques used in most block ciphers, especially with typical key sizes. As a result, public-key cryptosystems are commonly hybrid cryptosystems, in which a fast high-quality symmetric-key encryption algorithm is used for the message itself, while the relevant symmetric key is sent with the message, but encrypted using a public-key algorithm. Similarly, hybrid signature schemes are often used, in which a cryptographic hash function is computed, and only the resulting hash is digitally signed. [9]

(5)Cryptanalysis

The goal of cryptanalysis is to find some weakness or insecurity in a cryptographic scheme, thus permitting its subversion or evasion.

It is a commonly held misconception that every encryption method can be broken. In connection with his WWII work at Bell Labs, Claude Shannon proved that the one-time pad cipher is unbreakable, provided the key material is truly random, never reused, kept secret from all possible attackers, and of equal or greater length than the message. [20] Most ciphers, apart from the one-time pad, can be broken with enough computational effort by brute force attack, but the amount of effort needed may be exponentially dependent on the key size, as compared to the effort needed to use the cipher. In such cases, effective security could be achieved if it is proven that the effort required (i.e., "work factor", in Shannon’s terms) is beyond the ability of any adversary. This means it must be shown that no efficient method (as opposed to the time-consuming brute force method) can be found to break the cipher. Since no such proof currently exists, the one-time pad remains the only theoretically unbreakable cipher.
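The one-time pad itself is only a few lines. In the sketch below, `secrets.token_bytes` stands in for a source of truly random key material, which is an assumption (it is a cryptographically strong generator, not a physical random source), and the function names are illustrative.

```python
import secrets


def otp_encrypt(message: bytes) -> tuple:
    """Generate a fresh key as long as the message and XOR the two together."""
    key = secrets.token_bytes(len(message))
    ciphertext = bytes(m ^ k for m, k in zip(message, key))
    return key, ciphertext


def otp_decrypt(ciphertext: bytes, key: bytes) -> bytes:
    """XOR with the same key recovers the message."""
    return bytes(c ^ k for c, k in zip(ciphertext, key))


key, ciphertext = otp_encrypt(b"attack at dawn")
print(otp_decrypt(ciphertext, key))  # b'attack at dawn'
```

The conditions in the text map directly onto the code: the key is as long as the message, generated fresh on every call, and must never be used for a second message.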

There are a wide variety of cryptanalytic attacks, and they can be classified in any of several ways. A common distinction turns on what an attacker knows and what capabilities are available. In a ciphertext-only attack, the cryptanalyst has access only to the ciphertext (good modern cryptosystems are usually effectively immune to ciphertext-only attacks). In a known-plaintext attack, the cryptanalyst has access to a ciphertext and its corresponding plaintext (or to many such pairs). In a chosen-plaintext attack, the cryptanalyst may choose a plaintext and learn its corresponding ciphertext (perhaps many times); an example is gardening, used by the British during WWII. Finally, in a chosen-ciphertext attack, the cryptanalyst may be able to choose ciphertexts and learn their corresponding plaintexts. [9] Also important, often overwhelmingly so, are mistakes (generally in the design or use of one of the protocols involved; see Cryptanalysis of the Enigma for some historical examples of this).

Cryptanalysis of symmetric-key ciphers typically involves looking for attacks against block ciphers or stream ciphers that are more efficient than any attack that could be mounted against a perfect cipher. For example, a simple brute force attack against DES requires one known plaintext and 2^55 decryptions, trying approximately half of the possible keys, to reach a point at which chances are better than even that the key sought will have been found. But this may not be enough assurance; a linear cryptanalysis attack against DES requires 2^43 known plaintexts and approximately 2^43 DES operations. [21] This is a considerable improvement on brute force attacks.
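The two work factors can be compared directly. DES uses a 56-bit key, so trying half of the key space means about 2^55 trial decryptions, against roughly 2^43 operations for linear cryptanalysis. The variable names below are assumptions made for the example.

```python
DES_KEY_BITS = 56

# Expected brute-force work: half of the 2^56 possible keys.
brute_force_trials = 2 ** (DES_KEY_BITS - 1)

# Approximate work for linear cryptanalysis, as quoted in the text.
linear_cryptanalysis_ops = 2 ** 43

speedup = brute_force_trials // linear_cryptanalysis_ops
print(brute_force_trials)        # 36028797018963968
print(linear_cryptanalysis_ops)  # 8796093022208
print(speedup)                   # 4096
```

The attack is thus about 4096 (2^12) times cheaper in computation, although it needs a very large number of known plaintexts, which brute force does not.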

Public-key algorithms are based on the computational difficulty of various problems. The most famous of these is integer factorization (e.g., the RSA algorithm is based on a problem related to integer factoring), but the discrete logarithm problem is also important. Much public-key cryptanalysis concerns numerical algorithms for solving these computational problems, or some of them, efficiently (i.e., in a practical time). For instance, the best known algorithms for solving the elliptic curve-based version of discrete logarithm are much more time-consuming than the best known algorithms for factoring, at least for problems of more or less equivalent size. Thus, other things being equal, to achieve an equivalent strength of attack resistance, factoring-based encryption techniques must use larger keys than elliptic curve techniques. For this reason, public-key cryptosystems based on elliptic curves have become popular since their invention in the mid-1980s.

While pure cryptanalysis uses weaknesses in the algorithms themselves, other attacks on cryptosystems are based on actual use of the algorithms in real devices, and are called side-channel attacks. If a cryptanalyst has access to, say, the amount of time the device took to encrypt a number of plaintexts or report an error in a password or PIN character, he may be able to use a timing attack to break a cipher that is otherwise resistant to analysis. An attacker might also study the pattern and length of messages to derive valuable information; this is known as traffic analysis, [22] and can be quite useful to an alert adversary. Poor administration of a cryptosystem, such as permitting keys that are too short, will make any system vulnerable, regardless of other virtues. And, of course, social engineering and other attacks against the personnel who work with cryptosystems or the messages they handle (e.g., bribery, extortion, blackmail, espionage, torture) may be the most productive attacks of all.
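The PIN example can be sketched in code. The naive comparison below returns as soon as it finds a wrong character, so its running time hints at how many leading characters were correct; `hmac.compare_digest` from the standard library is designed to avoid that leak. The function names and sample values are assumptions made for the example.

```python
import hmac


def naive_equal(supplied: bytes, expected: bytes) -> bool:
    """Early-exit comparison: time taken depends on the position of the first mismatch."""
    if len(supplied) != len(expected):
        return False
    for a, b in zip(supplied, expected):
        if a != b:
            return False  # returning here is what leaks timing information
    return True


def constant_time_equal(supplied: bytes, expected: bytes) -> bool:
    """Comparison whose duration does not depend on where the inputs differ."""
    return hmac.compare_digest(supplied, expected)


pin = b"4931"
print(naive_equal(b"4930", pin), constant_time_equal(b"4930", pin))  # False False
print(naive_equal(b"4931", pin), constant_time_equal(b"4931", pin))  # True True
```

Both functions give the same answers; the difference lies only in what an attacker measuring response times can learn.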

(6)Cryptographic primitives

Much of the theoretical work in cryptography concerns cryptographic primitives (algorithms with basic cryptographic properties) and their relationship to other cryptographic problems. More complicated cryptographic tools are then built from these basic primitives. Complex functionality in an application must be built in using combinations of these algorithms and assorted protocols. Such combinations are called cryptosystems, and it is they which users actually encounter. Examples include PGP and its variants, ssh, SSL/TLS, all PKIs, digital signatures, etc. One basic primitive is the one-way function, a function intended to be easy to compute but hard to invert.

But note that, in a very general sense, for any cryptographic application to be secure (if based on computational feasibility assumptions), one-way functions must exist. However, if one-way functions exist, this implies that P ≠ NP. [3] Since the P versus NP problem is currently unsolved, it is not known if one-way functions really do exist. If they do exist, then secure pseudorandom generators and secure pseudorandom functions also exist. [23]

Other cryptographic primitives include the encryption algorithms themselves, one-way permutations, trapdoor permutations, etc.

(7)Cryptographic protocols

In many cases, cryptographic techniques involve back and forth communication among two or more parties in space (e.g., between the home office and a branch office) or across time (e.g., cryptographically protected backup data). The term cryptographic protocol captures this general idea.

Cryptographic protocols have been developed for a wide range of problems, including relatively simple ones like interactive proof systems, [24] secret sharing, [25] [26] and zero-knowledge proofs, [27] and much more complex ones like electronic cash [28] and secure multiparty computation. [29]
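Secret sharing is compact enough to sketch. The code below follows the polynomial approach of Shamir's scheme: the secret is the constant term of a random polynomial over a prime field, each share is a point on it, and any `threshold` shares recover the secret by interpolation at zero. The field modulus (the Mersenne prime 2^61 - 1), the function names, and the 2-of-3 parameters are assumptions made for the example; `pow(x, -1, p)` needs Python 3.8 or later.

```python
import secrets

PRIME = 2 ** 61 - 1  # field modulus; must exceed the secret and the share count


def make_shares(secret: int, threshold: int, count: int) -> list:
    """Split `secret` into `count` shares, any `threshold` of which recover it."""
    coefficients = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]

    def evaluate(x: int) -> int:
        # Horner's rule, highest-degree coefficient first.
        result = 0
        for coefficient in reversed(coefficients):
            result = (result * x + coefficient) % PRIME
        return result

    return [(x, evaluate(x)) for x in range(1, count + 1)]


def recover_secret(shares: list) -> int:
    """Lagrange interpolation of the polynomial at x = 0."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        numerator, denominator = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                numerator = (numerator * -xj) % PRIME
                denominator = (denominator * (xi - xj)) % PRIME
        secret = (secret + yi * numerator * pow(denominator, -1, PRIME)) % PRIME
    return secret


shares = make_shares(123456789, threshold=2, count=3)
print(recover_secret(shares[:2]))               # 123456789
print(recover_secret([shares[0], shares[2]]))   # 123456789
```

A single share on its own is just one point on an unknown line, so it reveals nothing about the constant term.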

When the security of a good cryptographic system fails, it is rare that the vulnerability leading to the breach will have been in a quality cryptographic primitive. Instead, weaknesses are often mistakes in the protocol design (often due to inadequate design procedures, or less than thoroughly informed designers), in the implementation (e.g., a software bug), in a failure of the assumptions on which the design was based (e.g., proper training of those who will be using the system), or some other human error.

Many cryptographic protocols have been designed and analyzed using ad hoc methods, but they rarely have any proof of security, leaving aside the effects of humans in their operations. Methods for formally analyzing the security of protocols, based on techniques from mathematical logic (see for example BAN logic), and more recently from concrete security principles, have been the subject of research for the past few decades. [30] [31] [32] Unfortunately, to date these tools have been cumbersome and are not widely used for complex designs.

The study of how best to implement and integrate cryptography in applications is itself a distinct field; see cryptographic engineering and security engineering.

2.Key Words

[1] plaintext: In cryptography, plaintext is the information which the sender wishes to transmit to the receiver(s).

[2] encryption: In cryptography, encryption is the process of transforming information (referred to as plaintext) using an algorithm (called a cipher) to make it unreadable to anyone except those possessing special knowledge, usually referred to as a key. The result of the process is encrypted information (in cryptography, referred to as ciphertext).

[3] cipher: In cryptography, a cipher (or cypher) is an algorithm for performing encryption and decryption: a series of well-defined steps that can be followed as a procedure.

[4] key: In cryptography, a key is a piece of information (a parameter) that determines the functional output of a cryptographic algorithm. Without a key, the algorithm would have no result.

[5] digital signature: A digital signature or digital signature scheme is a type of asymmetric cryptography used to simulate the security properties of a handwritten signature on paper. Digital signature schemes consist of at least three algorithms: a key generation algorithm, a signature algorithm, and a verification algorithm.

[6] message authentication code (MAC): A cryptographic message authentication code (MAC) is a short piece of information used to authenticate a message. A MAC algorithm accepts as input a secret key and an arbitrary-length message to be authenticated, and outputs a MAC (sometimes known as a tag).

[7] brute force attack: In cryptanalysis, a brute force attack is a method of defeating a cryptographic scheme by trying a large number of possibilities; for example, possible keys in order to decrypt a message.

[8] quantum computer: A quantum computer is a device for computation that makes direct use of distinctively quantum mechanical phenomena, such as superposition and entanglement, to perform operations on data. In a classical (or conventional) computer, information is stored as bits; in a quantum computer, it is stored as qubits (quantum binary digits). The basic principle of quantum computation is that the quantum properties can be used to represent and structure data, and that quantum mechanisms can be devised and built to perform operations with this data. [1]

3.References

[1] Liddell and Scott’s Greek-English Lexicon. Oxford University Press. 1984.

[2] David Kahn. The Codebreakers, 1967, ISBN 0-684-83130-9.

[3] Oded Goldreich. Foundations of Cryptography. Volume 1: Basic Tools. Cambridge University Press, 2001, ISBN 0-521-79172-3.

[4] Cryptology (definition). Merriam-Webster’s Collegiate Dictionary (11th edition). Merriam-Webster, Retrieved on 2008-02-01.

[5] Kama Sutra. Sir Richard F. Burton, translator. Part I, Chapter III, 44th and 45th arts.

[6] Hakim, Joy (1995). A History of Us: War, Peace and all that Jazz. New York: Oxford University Press. ISBN 0-19-509514-6.

[7] James Gannon. Stealing Secrets, Telling Lies: How Spies and Codebreakers Helped Shape the Twentieth Century, Washington, D.C., Brassey’s, 2001, ISBN 1-57488-367-4.

[8] Whitfield Diffie and Martin Hellman. New Directions in Cryptography. IEEE Transactions on Information Theory. vol. IT-22, Nov. 1976, pp. 644–654.

[9] A. J. Menezes, P. C. van Oorschot, S. A. Vanstone. Handbook of Applied Cryptography, ISBN 0-8493-8523-7.

[10] FIPS PUB 197: The official Advanced Encryption Standard.

[11] NCUA letter to credit unions, July 2004.

[12] RFC 2440 - Open PGP Message Format.

[13] SSH at windowsecurity.com by Pawel Golen, July 2004.

[14] Bruce Schneier. Applied Cryptography, 2nd edition, Wiley, 1996, ISBN 0-471-11709-9.

[15] Whitfield Diffie and Martin Hellman. “Multi-user cryptographic techniques”. AFIPS Proceedings 45, pp. 109–112, June 8, 1976.

[16] Ralph Merkle was working on similar ideas at the time and encountered publication delays, and Hellman has suggested that the term used should be Diffie-Hellman-Merkle asymmetric key cryptography.

[17] David Kahn. “Cryptology Goes Public”, 58 Foreign Affairs 141, 151 (fall 1979), p. 153.

[18] R. Rivest, A. Shamir, L. Adleman. A Method for Obtaining Digital Signatures and Public-Key Cryptosystems. Communications of the ACM, Vol. 21(2), pp. 120–126. 1978. Previously released as an MIT “Technical Memo” in April 1977, and published in Martin Gardner’s Scientific American Mathematical Recreations column.

[19] Clifford Cocks. A Note on Non-Secret Encryption. CESG Research Report, 20 November 1973.

[20] Claude Shannon and Warren Weaver. The Mathematical Theory of Communication. University of Illinois Press, 1963, ISBN 0-252-72548-4.

[21] Pascal Junod. “On the Complexity of Matsui’s Attack”. SAC 2001.

[22] Dawn Song, David Wagner, and Xuqing Tian. Timing Analysis of Keystrokes and Timing Attacks on SSH. In Tenth USENIX Security Symposium, 2001.

[23] J. Håstad, R. Impagliazzo, L. A. Levin, and M. Luby. A Pseudorandom Generator From Any One-Way Function. SIAM J. Computing, vol. 28, num. 4, pp. 1364–1396, 1999.

[24] László Babai. Trading group theory for randomness. Proceedings of the Seventeenth Annual Symposium on the Theory of Computing, ACM, 1985.

[25] G. Blakley. Safeguarding cryptographic keys. In Proceedings of AFIPS 1979, volume 48, pp. 313–317, June 1979.

[26] A. Shamir. How to share a secret. In Communications of the ACM, volume 22, pp. 612–613, ACM, 1979.

[27] S. Goldwasser, S. Micali, and C. Rackoff. The Knowledge Complexity of Interactive Proof Systems. SIAM J. Computing, vol. 18, num. 1, pp. 186–208, 1989.

[28] S. Brands. Untraceable Off-line Cash in Wallets with Observers. In Advances in Cryptology: Proceedings of CRYPTO, Springer-Verlag, 1994.

[29] R. Canetti. Universally composable security: a new paradigm for cryptographic protocols. In Proceedings of the 42nd annual Symposium on the Foundations of Computer Science (FOCS), pp. 136–154, IEEE, 2001.

[30] D. Dolev and A. Yao. On the security of public key protocols. IEEE Transactions on Information Theory, vol. 29, num. 2, pp. 198–208, IEEE, 1983.

[31] M. Abadi and P. Rogaway. Reconciling two views of cryptography (the computational soundness of formal encryption). In IFIP International Conference on Theoretical Computer Science (IFIP TCS 2000), Springer-Verlag, 2000.

[32] D. Song. Athena, an automatic checker for security protocol analysis. In Proceedings of the 12th IEEE Computer Security Foundations Workshop (CSFW), IEEE, 1999.

1.5.2 Network Security

1.Reading Materials

Network security consists of the provisions made in an underlying computer network infrastructure, the policies adopted by the network administrator to protect the network and the network-accessible resources from unauthorized access, and the effectiveness (or lack thereof) of these measures combined.

(1)Comparison with computer security

Securing network infrastructure is like securing the possible entry points of attacks on a country by deploying appropriate defenses. Computer security is more like providing the means to protect a single PC against outside intrusion. The former is the better and more practical way to protect civilians from exposure to attacks. Its preventive measures attempt to secure access to individual computers (that is, the network itself), thereby protecting the computers and other shared resources connected by the network, such as printers and network-attached storage. Attacks can be stopped at their entry points before they spread. By contrast, in computer security the measures taken are focused on securing individual computer hosts. A computer host whose security is compromised is likely to infect other hosts connected to a potentially unsecured network. A computer host’s security is also vulnerable to users with higher access privileges to that host.

(2)Attributes of a secure network

Network security starts with authenticating the user, most commonly with a username and a password. Once the user is authenticated, a stateful firewall enforces access policies, such as which services the network users are allowed to access. [33] Though effective at preventing unauthorized access, this component fails to check potentially harmful content, such as computer worms, being transmitted over the network. An intrusion prevention system (IPS) [34] helps detect and prevent such malware. An IPS also monitors network traffic for suspicious content, volume, and anomalies to protect the network from attacks such as denial of service. Communication between two hosts using the network can be encrypted to maintain privacy. Individual events occurring on the network can be tracked for audit purposes and for later high-level analysis.

Honeypots, essentially decoy network-accessible resources, could be deployed in a network as surveillance and early-warning tools. Techniques used by the attackers that attempt to compromise these decoy resources are studied during and after an attack to keep an eye on new exploitation techniques. Such analysis could be used to further tighten security of the actual network being protected by the honeypot. [35]

(3)Security management

Security management for networks differs from one situation to another. A small home or office requires only basic security, while large businesses require high maintenance and advanced software and hardware to prevent malicious attacks such as hacking and spamming.

Small homes

• A basic firewall.

• For Windows users, basic antivirus software such as McAfee, Norton AntiVirus, AVG Antivirus, or Windows Defender. Other products may suffice if they contain a virus scanner to scan for malicious software.

• When using a wireless connection, use a robust password.

Medium businesses

• A fairly strong firewall.

• Strong antivirus software and Internet security software.

• For authentication, use strong passwords and change them on a bi-weekly/monthly basis.

• When using a wireless connection, use a robust password.

• Raise employees’ awareness of physical security.

• Use an optional network analyzer or network monitor.

Large businesses

• A strong firewall and proxy to keep unwanted people out.

• Strong antivirus software and Internet security software.

• For authentication, use strong passwords and change them on a weekly/bi-weekly basis.

• When using a wireless connection, use a robust password.

• Have employees exercise physical security precautions.

• Prepare a network analyzer or network monitor and use it when needed.

• Implement physical security management, such as closed-circuit television for entry areas and restricted zones.

• Security fencing to mark the company’s perimeter.

• Fire extinguishers for fire-sensitive areas like server rooms and security rooms.

• Security guards can help to maximize security.

School

• An adjustable firewall and proxy to allow authorized users access from the outside and inside.

• Strong antivirus software and Internet security software.

• Wireless connections that lead to firewalls.

• CIPA compliance.

• Supervision of the network to guarantee updates and changes based on popular site usage.

• Constant supervision by teachers, librarians, and administrators to guarantee protection against attacks by both internet and sneakernet sources.

Large Government

• A strong firewall and proxy to keep unwanted people out.

• Strong antivirus software and Internet security software.

• Strong encryption, usually with a 256-bit key.

• Whitelist authorized wireless connections; block all else.

• All network hardware is in secure zones.

• All hosts should be on a private network that is invisible from the outside.

• Put all servers in a DMZ, or behind a firewall from the outside and from the inside.

• Security fencing to mark the perimeter, with the wireless range set to match it.

2.Key Words

Authorization: the concept of allowing access to resources only to those permitted to use them. More formally, authorization is a process (often part of the operating system) that protects computer resources by only allowing those resources to be used by resource consumers that have been granted authority to use them. Resources include individual files’ or items’ data, computer programs, computer devices and functionality provided by computer applications.

Network-attached storage (NAS): file-level computer data storage connected to a computer network, providing data access to heterogeneous network clients.

Authentication: the act of establishing or confirming something (or someone) as authentic, that is, that claims made by or about the thing are true. This might involve confirming the identity of a person, the origins of an artifact, or assuring that a computer program is a trusted one.

Computer worm: a self-replicating computer program. It uses a network to send copies of itself to other nodes (computer terminals on the network) and it may do so without any user intervention. Unlike a virus, it does not need to attach itself to an existing program. Worms almost always cause harm to the network, if only by consuming bandwidth, whereas viruses almost always corrupt or modify files on a targeted computer.

Denial-of-service attack (DoS attack) or distributed denial-of-service attack (DDoS attack): an attempt to make a computer resource unavailable to its intended users. Although the means to carry out, motives for, and targets of a DoS attack may vary, it generally consists of the concerted, malevolent efforts of a person or persons to prevent an Internet site or service from functioning efficiently or at all, temporarily or indefinitely. Perpetrators of DoS attacks typically target sites or services hosted on high-profile web servers such as banks, credit card payment gateways, and even DNS root servers.

3.References

[33] A Role-Based Trusted Network Provides Pervasive Security and Compliance - interview with Jayshree Ullal,senior VP of Cisco.

[34] Dave Dittrich. Network monitoring/Intrusion Detection Systems(IDS). University of Washington.

[35] Honeypots,Honeynets. Security of the Internet(The Froehlich/Kent Encyclopedia of Telecommunications vol. 15. Marcel Dekker,New York,1997,pp. 231-255). Introduction to Network Security,Matt Curtin.

1.5.3 Application Security

1.Reading Materials

Application security encompasses measures taken to prevent exceptions in the security policy of an application or the underlying system(vulnerabilities)through flaws in the design,development,or deployment of the application.

Applications only control the use of resources granted to them,and not which resources are granted to them. They,in turn,determine the use of these resources by users of the application through application security.

(1)Methodology

According to the patterns & practices Improving Web Application Security book,a principle-based approach for application security includes: [36]

• Know your threats

• Secure the network,host and application

• Bake security into your application life cycle

Note that this approach is technology / platform independent. It is focused on principles,patterns,and practices.

For more information on a principle-based approach to application security,see patterns & practices Application Security Methodology.

(2)Threats,Attacks,Vulnerabilities,and Countermeasures

According to the patterns & practices Improving Web Application Security book,the following terms are relevant to application security: [36]

• Asset. A resource of value such as the data in a database or on the file system,or a system resource.

• Threat. A potential occurrence,malicious or otherwise,that could harm an asset.

• Vulnerability. A weakness that makes a threat possible.

• Attack(or exploit). An action taken to harm an asset.

• Countermeasure. A safeguard that addresses a threat and mitigates risk.
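To make the five terms above concrete, the following minimal sketch maps each of them onto one small scenario. SQL injection is chosen here purely as an illustration and is not taken from the text; the table layout and function names are hypothetical.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    # Asset: the rows stored in the users table.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "a-secret"), ("bob", "b-secret")],
    )
    return conn


def lookup_vulnerable(conn: sqlite3.Connection, name: str) -> list:
    # Vulnerability: user input is pasted directly into the SQL text.
    query = "SELECT secret FROM users WHERE name = '" + name + "'"
    return [row[0] for row in conn.execute(query)]


def lookup_safe(conn: sqlite3.Connection, name: str) -> list:
    # Countermeasure: a parameterized query treats the input as data only.
    cursor = conn.execute("SELECT secret FROM users WHERE name = ?", (name,))
    return [row[0] for row in cursor]


conn = make_db()
# Attack: input crafted so that the WHERE clause is always true.
attack_input = "nobody' OR '1'='1"
leaked = lookup_vulnerable(conn, attack_input)   # every secret is returned
blocked = lookup_safe(conn, attack_input)        # no row matches the literal name
```

The threat in this scenario is the disclosure of every stored secret. The vulnerable lookup makes that threat possible, while the parameterized lookup mitigates it.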

(3)Application Threats / Attacks

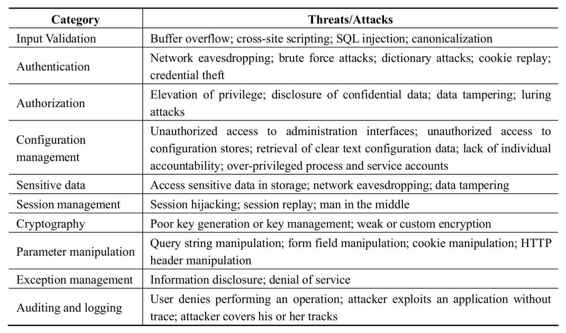

According to the patterns & practices Improving Web Application Security book,the following are classes of common application security threats / attacks: [36]

(4)Mobile Application Security

The proportion of mobile devices providing open platform functionality is expected to continue to increase over time. The openness of these platforms offers significant opportunities to all parts of the mobile ecosystem,because flexible programmes and services can be installed,removed or refreshed multiple times in line with the user’s needs and requirements. However,with openness comes responsibility: unrestricted access to mobile resources and APIs by applications of unknown or untrusted origin could result in damage to the user,the device,the network or all of these,if not managed by suitable security architectures and network precautions. Mobile application security is provided in some form on most open OS mobile devices(SymbianOS [37] ,Microsoft,BREW,etc.). Industry groups,including the GSM Association and the Open Mobile Terminal Platform(OMTP),have also created recommendations. [38]

(5)Security testing for applications

Security testing techniques scour for vulnerabilities or security holes in applications. These vulnerabilities leave applications open to exploitation. Ideally,security testing is implemented throughout the entire software development life cycle(SDLC)so that vulnerabilities may be addressed in a timely and thorough manner. Unfortunately,testing is often conducted as an afterthought at the end of the development cycle.

Vulnerability scanners,and more specifically web application scanners,otherwise known as penetration testing tools(i.e.,ethical hacking tools),have historically been used by security organizations within corporations and by security consultants to automate the security testing of HTTP requests and responses; however,they are not a substitute for actual source code review. Reviews of an application’s source code can be performed manually or in an automated fashion. Given the common size of individual programs(often 500K lines of code or more),the human brain cannot execute the comprehensive data flow analysis needed to check all circuitous paths of an application program for vulnerability points. The human brain is better suited to filtering,interpreting and reporting the outputs of commercially available automated source code analysis tools than to tracing every possible path through a compiled code base to find root-cause vulnerabilities.
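As a rough illustration of what automated source code analysis does, the sketch below walks the syntax tree of a Python program and reports calls to functions on a small deny list. The rule set and names are hypothetical, and the technique shown is simple pattern matching on call names, not the full data flow analysis that commercial tools perform.

```python
import ast

# Hypothetical rule set: call names treated as risky for this illustration.
RISKY_CALLS = {"eval", "exec", "system"}


def find_risky_calls(source: str) -> list:
    """Return sorted (line_number, call_name) pairs for calls in RISKY_CALLS."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        func = node.func
        if isinstance(func, ast.Name):         # plain call, e.g. eval(...)
            name = func.id
        elif isinstance(func, ast.Attribute):  # attribute call, e.g. os.system(...)
            name = func.attr
        else:
            continue
        if name in RISKY_CALLS:
            findings.append((node.lineno, name))
    return sorted(findings)


# Sample program under review; it is only parsed here, never executed.
SAMPLE = '''
import os
def run(user_input):
    os.system("ping " + user_input)
    return eval(user_input)
'''

report = find_risky_calls(SAMPLE)
```

A human reviewer would then filter and interpret the entries in `report`, which is the division of labour the paragraph above describes.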

The two types of automated tools associated with application vulnerability detection(application vulnerability scanners)are Penetration Testing Tools(otherwise known as Black Box Testing Tools)and Source Code Analysis Tools(otherwise known as White Box Testing Tools). Tools in the Black Box Testing arena include Devfense,Watchfire,HP [39] (through the acquisition of SPI Dynamics [40] ),Cenzic,Nikto(open source),Grendel-Scan(open source),N-Stalker and Sandcat(freeware). Tools in the White Box Testing arena include Armorize Technologies,Fortify Software and Ounce Labs.

Banking and large e-commerce corporations were the earliest adopters of these types of tools. It is commonly held within these firms that both Black Box testing and White Box testing tools are needed in the pursuit of application security. Black Box testing tools(meaning penetration testing tools)are typically described as ethical hacking tools used to attack the application surface in order to expose vulnerabilities buried within the source code hierarchy. Penetration testing tools are executed against the already deployed application. White Box testing tools(meaning source code analysis tools)are used by either the application security groups or the application development groups. Typically introduced into a company through the application security organization,the White Box tools complement the Black Box testing tools in that they give visibility into the specific root vulnerabilities within the source code before that code is deployed. Vulnerabilities identified with White Box testing and Black Box testing are typically classified in accordance with the OWASP taxonomy for software coding errors. White Box testing vendors have recently introduced dynamic versions of their source code analysis methods,which operate on deployed applications. Given that the White Box testing tools have dynamic versions similar to the Black Box testing tools,the results of both tools can be correlated in the same software error detection paradigm,giving the client company broader application protection.
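The Black Box approach can be sketched in a few lines: the tester sends a crafted input to the running application and inspects only the response, without reading the source. Both "applications" below are hypothetical stand-ins written as plain functions so that the example is self-contained; a real penetration testing tool would send HTTP requests to a deployed site.

```python
import html


def vulnerable_app(params: dict) -> str:
    # Hypothetical deployed application: echoes input without escaping it.
    return "<p>Hello, " + params.get("name", "") + "</p>"


def hardened_app(params: dict) -> str:
    # Same behaviour, but the output is HTML-escaped before it is returned.
    return "<p>Hello, " + html.escape(params.get("name", "")) + "</p>"


# Probe string that should never appear unescaped in a response.
PROBE = "<script>alert('probe')</script>"


def black_box_scan(app) -> bool:
    """Return True if the probe comes back unescaped, i.e. a finding."""
    response = app({"name": PROBE})
    return PROBE in response
```

The scan reports a finding for `vulnerable_app` and none for `hardened_app`, yet it cannot say which line of code is responsible. Locating that line is the task the text assigns to White Box tools.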

2.Key Words

(1)Vulnerability: is the susceptibility to physical or emotional injury or attack. It also means to have one’s guard down,open to censure or criticism; assailable. Vulnerability refers to a person’s state of being liable to succumb,as to persuasion or temptation.

(2)A countermeasure: is a system(usually for a military application)designed to prevent sensor-based weapons from acquiring and/or destroying a target.

Countermeasures that alter the electromagnetic,acoustic or other signature(s)of a target thereby altering the tracking and sensing behavior of an incoming threat(e.g.,guided missile)are designated softkill measures.

(3)Configuration management(CM): is a field of management that focuses on establishing and maintaining consistency of a product’s performance and its functional and physical attributes with its requirements,design,and operational information throughout its life. For information assurance,CM can be defined as the management of security features and assurances through control of changes made to hardware,software,firmware,documentation,test,test fixtures,and test documentation throughout the life cycle of an information system.

(4)Session management: is the process of keeping track of a user’s activity across sessions of interaction with the computer system.

(5)The Open Mobile Terminal Platform(OMTP): is a forum created by mobile network operators to discuss standards with manufacturers of cell phones and other mobile devices. Although dominated by network operators,the OMTP includes manufacturers such as Nokia,Samsung,Motorola,Sony Ericsson and LG Electronics.
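The session management entry (4) above can be illustrated with a minimal sketch of one common, web-style interpretation: the server issues an unguessable token at login and uses it to recognise the same user on later requests. The in-memory store, the 30-minute lifetime and the function names are assumptions made for this example only.

```python
import secrets
import time
from typing import Optional

SESSION_TTL_SECONDS = 1800  # hypothetical 30-minute lifetime
_sessions = {}              # token -> (user, expiry timestamp)


def create_session(user: str, now: Optional[float] = None) -> str:
    """Issue a random token that identifies the user's session."""
    start = time.time() if now is None else now
    token = secrets.token_urlsafe(32)
    _sessions[token] = (user, start + SESSION_TTL_SECONDS)
    return token


def get_user(token: str, now: Optional[float] = None) -> Optional[str]:
    """Return the user for a valid token, or None if unknown or expired."""
    entry = _sessions.get(token)
    if entry is None:
        return None
    user, expires_at = entry
    current = time.time() if now is None else now
    if current >= expires_at:
        del _sessions[token]  # expired sessions are discarded
        return None
    return user


def end_session(token: str) -> None:
    """Log out: forget the token so it can no longer be used."""
    _sessions.pop(token, None)
```

The optional `now` parameter exists only so that expiry can be demonstrated without waiting; ordinary callers would omit it.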

3.References

[36] Improving Web Application Security: Threats and Countermeasures. Microsoft Corporation.

[37] Platform Security Concepts. Simon Higginson.

[38] Recommendations papers. Open Mobile Terminal Platform.

[39] Application security: Find web application security vulnerabilities during every phase of the software development lifecycle,HP center.

[40] HP acquires SPI Dynamics. CNET news.com.